Apache Oozie - Big Data Workflow Engine

Hadoop is used to process very large data sets, and a single job is often not enough to complete all the required tasks. In that case you split the work into several jobs and use the output of one job as the input of the next; once all N jobs have completed, you have the final result. These N jobs need to be coordinated, and that is what Oozie does: it runs the jobs in order and hands the output of one job on as the input of another. In other words, Oozie is a workflow scheduler engine that coordinates N jobs as defined in its XML configuration file. To run Oozie, the BOOTSTRAP service must be available.

In the diagram above there are three jobs: A, B and C. The output of Job A is the input of Job B, and the output of Job B is in turn the input of Job C.

Apache Oozie can therefore be defined as follows.

Oozie is a workflow scheduler system to manage Apache Hadoop jobs.

Oozie Workflow jobs are Directed Acyclic Graphs (DAGs) of actions.

Oozie Coordinator jobs are recurrent Oozie Workflow jobs triggered by time (frequency) and data availability.

Oozie is integrated with the rest of the Hadoop stack, supporting several types of Hadoop jobs out of the box (such as Java map-reduce, Streaming map-reduce, Pig, Hive, Sqoop and Distcp) as well as system-specific jobs (such as Java programs and shell scripts).

Oozie is a scalable, reliable and extensible system.
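
To make the Coordinator definition above more concrete, here is a minimal sketch of a coordinator.xml that triggers a workflow once a day. The application name, the start and end dates, the schema version and the ${workflowAppPath} parameter are assumptions chosen for illustration, not values taken from this setup. The sketch covers the time trigger only; a data-availability trigger would need additional dataset definitions that are not shown here.

```xml
<!-- Hypothetical coordinator: runs the workflow stored at ${workflowAppPath} once per day -->
<coordinator-app name="daily-coordinator"
                 frequency="${coord:days(1)}"
                 start="2014-01-01T00:00Z"
                 end="2014-12-31T00:00Z"
                 timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.2">
    <action>
        <workflow>
            <!-- HDFS directory containing workflow.xml (assumed parameter) -->
            <app-path>${workflowAppPath}</app-path>
        </workflow>
    </action>
</coordinator-app>
```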

Oozie in the Hadoop ecosystem

The backbone of an Oozie configuration is the workflow XML file. The workflow.xml file holds the configuration for all N jobs. It is built from two kinds of nodes: control nodes and action nodes. Control nodes (such as start, end, kill, decision, fork and join) define where the workflow begins and ends and the order in which the jobs run. Action nodes hold the details of each job, so every job has its own action tag. A workflow therefore has a small set of control tags and N action tags, one for each job you run.
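
The structure described above can be sketched as a small workflow.xml in which Job A feeds Job B. This is only an illustrative sketch: the workflow and action names, the schema version and the parameters ${jobTracker}, ${nameNode}, ${inputDir}, ${intermediateDir} and ${outputDir} are assumptions, and the mapper and reducer classes are left out, so Hadoop's defaults would apply.

```xml
<workflow-app name="two-job-workflow" xmlns="uri:oozie:workflow:0.2">
    <!-- Control node: entry point, hands over to the first action -->
    <start to="job-a"/>

    <!-- Action node: Job A reads ${inputDir} and writes ${intermediateDir} -->
    <action name="job-a">
        <map-reduce>
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.input.dir</name>
                    <value>${inputDir}</value>
                </property>
                <property>
                    <name>mapred.output.dir</name>
                    <value>${intermediateDir}</value>
                </property>
            </configuration>
        </map-reduce>
        <ok to="job-b"/>
        <error to="fail"/>
    </action>

    <!-- Action node: Job B consumes the output of Job A -->
    <action name="job-b">
        <map-reduce>
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.input.dir</name>
                    <value>${intermediateDir}</value>
                </property>
                <property>
                    <name>mapred.output.dir</name>
                    <value>${outputDir}</value>
                </property>
            </configuration>
        </map-reduce>
        <ok to="end"/>
        <error to="fail"/>
    </action>

    <!-- Control node: stops the workflow if either job fails -->
    <kill name="fail">
        <message>Job failed: [${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>

    <!-- Control node: successful completion -->
    <end name="end"/>
</workflow-app>
```

The ok and error transitions on each action are what chain the jobs together: Job B starts only after Job A succeeds, and any failure routes to the kill node.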

Installation and Configuration

Oozie Installation on CentOS 6

We use the official CDH repository from Cloudera’s site to install CDH4. Go to the official CDH download section and download the CDH4 (i.e. 4.6) version, or use the following wget command to download the repository package and install it.

For OS 32 Bit

$ wget http://archive.cloudera.com/cdh4/one-click-install/redhat/6/i386/cloudera-cdh-4-0.i386.rpm

$ yum --nogpgcheck localinstall cloudera-cdh-4-0.i386.rpm

For OS 64 Bit

$ wget http://archive.cloudera.com/cdh4/one-click-install/redhat/6/x86_64/cloudera-cdh-4-0.x86_64.rpm

$ yum --nogpgcheck localinstall cloudera-cdh-4-0.x86_64.rpm

After adding the CDH repository, run the command below to install Oozie on the machine.

[root@kingsolomon ~]# yum install oozie

The command above should also install the Oozie client. If it does not, run the command below to install the Oozie client.

[root@kingsolomon ~]# yum install oozie-client

Oozie is now installed on the machine. Next, we configure it.

Oozie Configuration on CentOS 6

Because Oozie does not interact with Hadoop directly, it has to be configured accordingly.

Note: Apply all of these settings while Oozie is not running.

Oozie uses Derby as its default built-in database; however, I recommend using MySQL.

[root@kingsolomon ~]$ mysql -u root -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 3
Server version: 5.5.38 MySQL Community Server (GPL)

Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database oozie;
Query OK, 1 row affected (0.00 sec)

mysql> grant all privileges on oozie.* to 'oozie'@'localhost' identified by 'oozie';
Query OK, 0 rows affected (0.00 sec)

mysql> grant all privileges on oozie.* to 'oozie'@'%' identified by 'oozie';
Query OK, 0 rows affected (0.00 sec)

mysql> exit
Bye

Now add the MySQL settings to the oozie-site.xml file.

[root@kingsolomon ~]# cd /etc/oozie/conf

[root@kingsolomon ~]# vi oozie-site.xml

Add the following properties.

<property>
    <name>oozie.service.JPAService.jdbc.driver</name>
    <value>com.mysql.jdbc.Driver</value>
</property>
<property>
    <name>oozie.service.JPAService.jdbc.url</name>
    <value>jdbc:mysql://master:3306/oozie</value>
</property>
<property>
    <name>oozie.service.JPAService.jdbc.username</name>
    <value>oozie</value>
</property>
<property>
    <name>oozie.service.JPAService.jdbc.password</name>
    <value>oozie</value>
</property>

Download the MySQL JDBC connector JAR and copy it to the Oozie lib directory.

[root@kingsolomon Downloads]# cp mysql-connector-java-5.1.31-bin.jar /var/lib/oozie/

Create the Oozie database schema by running the command below.

[root@kingsolomon ~]# sudo -u oozie /usr/lib/oozie/bin/ooziedb.sh create -run

Sample output:

setting OOZIE_CONFIG=/etc/oozie/conf
setting OOZIE_DATA=/var/lib/oozie
setting OOZIE_LOG=/var/log/oozie
setting OOZIE_CATALINA_HOME=/usr/lib/bigtop-tomcat
setting CATALINA_TMPDIR=/var/lib/oozie
setting CATALINA_PID=/var/run/oozie/oozie.pid
setting CATALINA_BASE=/usr/lib/oozie/oozie-server-0.20
setting CATALINA_OPTS=-Xmx1024m
setting OOZIE_HTTPS_PORT=11443
setting OOZIE_HTTPS_KEYSTORE_PASS=password
......
Validate DB Connection
DONE
Check DB schema does not exist
DONE
Check OOZIE_SYS table does not exist
DONE
Create SQL schema
DONE
Create OOZIE_SYS table
DONE
Set MySQL MEDIUMTEXT flag
DONE

Oozie DB has been created for Oozie version '3.3.2-cdh4.7.1'

The SQL commands have been written to: /tmp/ooziedb-3060871175627729254.sql

Download the ExtJS library, then extract the contents of the file to /var/lib/oozie/ on the same host as the Oozie server.

[root@kingsolomon Downloads]# unzip ext-2.2.zip

[root@kingsolomon Downloads]# mv ext-2.2 /var/lib/oozie/

Make sure the MySQL service is up and running before starting Oozie.

[root@kingsolomon Desktop]# service mysqld start

Starting mysqld: [ OK ]

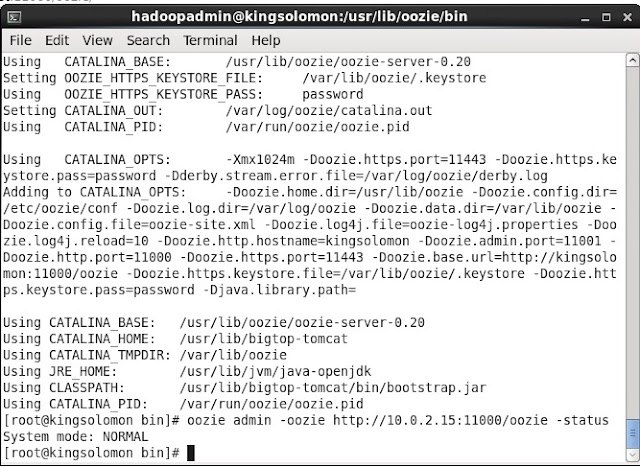

Start the Oozie server by running the command below.

[root@kingsolomon Desktop]# service oozie start

Sample output:

Setting OOZIE_HOME: /usr/lib/oozie
Sourcing: /usr/lib/oozie/bin/oozie-env.sh
setting OOZIE_CONFIG=/etc/oozie/conf
setting OOZIE_DATA=/var/lib/oozie
setting OOZIE_LOG=/var/log/oozie
setting OOZIE_CATALINA_HOME=/usr/lib/bigtop-tomcat
setting CATALINA_TMPDIR=/var/lib/oozie
setting CATALINA_PID=/var/run/oozie/oozie.pid
setting CATALINA_BASE=/usr/lib/oozie/oozie-server-0.20
setting CATALINA_OPTS=-Xmx1024m
setting OOZIE_HTTPS_PORT=11443
setting OOZIE_HTTPS_KEYSTORE_PASS=password
....
Using CATALINA_BASE: /usr/lib/oozie/oozie-server-0.20
Using CATALINA_HOME: /usr/lib/bigtop-tomcat
Using CATALINA_TMPDIR: /var/lib/oozie
Using JRE_HOME: /usr/lib/jvm/java-openjdk
Using CLASSPATH: /usr/lib/bigtop-tomcat/bin/bootstrap.jar
Using CATALINA_PID: /var/run/oozie/oozie.pid

Verify the Oozie Server Status

[root@kingsolomon Desktop]# service oozie status

running

[root@kingsolomon Desktop]# oozie admin -oozie http://kingsolomon:11000/oozie -status

System mode: NORMAL

Open the Oozie Web Console in a browser at http://kingsolomon:11000/oozie.

Hope you have enjoyed the article.

Author: Iqubal Mustafa Kaki, Technical Specialist

Want to connect with me

If you want to connect with me, please connect through my email - iqubal.kaki@gmail.com